Introduction

In this writing I wish to outline a teaching philosophy built around the concept of resilience. By this I mean forging a framework that acts as a dynamic and adaptive system of learning. It generates learning materials from a subject curriculum and reflects on the resources created. It also forms active links of information exchange between that reservoir of material and the learning goals of individual students and of the student community. These links can be changed, updated or opened up as new avenues to further learning. At the same time they remain adaptive enough that the student community is itself bolstered and made resilient, able to change its behavior in response to outside shocks. This, I am convinced, is a reasonable way to ensure that local and global changes to learning routines are not disastrous to the overall protocol of education.

Resilient Model of Learning Delivery

Teaching can be defined as an action that conveys a meaningful exchange of information, drawing on work done by both the teacher and the student community. Its aim is to establish links between educational material and the students' ability to recall and use that material in a dynamic and coherent way to solve problems in a complex environment. Students must be linked with material, through education, on the stage of that complex environment itself, so the process must be resilient to external factors. Learning, then, is also about strengthening the links made in a given educational setting, such as a class, and making them transferable to another setting, such as a project, an exam or the workplace. The links we may want to make resilient are as varied as the curriculum itself. They are not limited to remembering how to solve problems but include the ability to deconstruct information, construct models, share information and integrate new information.

These links are made resilient within the educational process as they are practiced and refined. They are also subject to shifts in priority driven by local and global factors, or are even broken and replaced or restored as the need arises. A resilient educational process therefore must have structure and a plastic, versatile memory, with the conventional focal point of education set by the nature of the complex society outside it. This focal point varies considerably across societies and across time. Goal orientation or grade validation may not be the focus or purpose of education in one society but may have a local basis for existence in another. In other words, goals vary with the structure of society and with a student's own aims, for example learning a trade or skill by "learning by doing" rather than through pure theory.

Any educational protocol that calls itself resilient must link its actions to outcomes that take the form of measurable evidence. That evidence provides coherent feedback on the learning resources over time. Raw evidence can include grading outcomes and samples of which learning materials students focus on (e.g. notes, and requests for or downloads of study content over time). It can also include student feedback. From this evidence, a specific framework can be created for reflecting on learning outcomes, managing content, and making judgements and choices about how the subject matter is delivered in class, examinations and projects. This framework can form an adaptive memory of teaching a subject as the subject itself changes, as the student community changes, or as other unforeseen changes occur. In a resilient model, modifications to education delivery are made on the basis of these measured changes. Certain links between a teaching resource and a student audience are broken, replaced, or switched from one strategy to another over time.

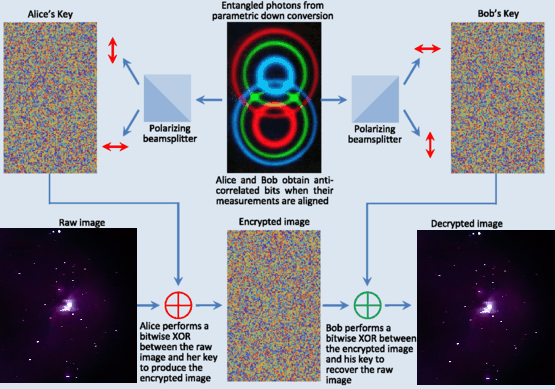

Model Diagram

In this model, changes, or indeed shocks, to any learning delivery system may arise from local factors in the class setting or the wider university setting. They may also arise from global factors due to unprecedented events.

- In local cases we could have two scenarios:

First, we could consider an individual student or student community engaged in learning behavior, forming a certain set of links between the learning resource material and the outcomes in a curriculum. Individual students may see the curriculum as a well of sorts, to be filled via the resilient links they create in order to reach a certain level of mastery.

Overall, a student community would be expected to reach a certain level by the end of a class or course, for example as listed in a class or course syllabus. Against an unchanging background, the links established in the curriculum may be treated as equal. When an exam is scheduled or a group project is proposed, however, the student community and individuals will shift which links in the curriculum well they favor. The links themselves must therefore be made resilient enough to withstand this change in priorities.

In a second example, a module may have an element that in one year was confined to theory alone. Suppose reflection on the gathered evidence of learning outcomes coincides with new learning resources becoming available. The old links from learning resources to class delivery may then be switched from pure theory to theory plus practical work. This can trigger further changes, for example replacing the theory component altogether, in a way that might not have been considered without updating through reflection.

- In global cases one can think of a very general set of scenarios:

These scenarios would be global in scope. They could be caused by changes in society, technology, economics and job markets, or by unprecedented occurrences such as changing demographics, natural and human-induced disasters, diminishing returns on growth and increased societal pressures. In these cases a model based on resilience is not an abstraction. In several instances, educational resources may have to be made streamlined and efficient yet highly resilient. For example, during a natural disaster a practical, hands-on course may be moved entirely online and remote. Let's say first-year students are learning practical electronic circuit building.

Such a course may already have had online simulation and theory resources available. Ideally these would have been made resilient enough to be effectively "switched on". The link between the resource and student acquisition would then be strengthened through more active reflection. That link would become resilient and serve as an avenue toward learning goals, whose outcomes feed back into further reflection and refinement over time.

Final Statement

The model I have described, and illustrated in the diagram above, is in many respects fairly generative. It is itself a product of my own reflection and rumination on how to teach varied subject matter, mostly in science, engineering and mathematics, within a highly complex, dynamic and emerging local and global community. It is my sincere view, however, that resilient networks within institutional systems allow resilient communities to emerge and to withstand local and global shifts in behavior. Such shifts are inherently risky but become safer to implement if the links between informational resources and active teaching are continually updated in an adaptive way.